1.1. Virtual Machine Installation¶

Minimum Requirements¶

A server with an operating system installed and a KVM hypervisor are required for the virtual machine installation.

Recommended OS:

CentOS/RHEL/Oracle Linux 7

RHEL/Oracle Linux 8

Ubuntu 20.04

Minimum system requirements for the Virtual Machine:

2 processor cores

6GB RAM

2 network interfaces (management and user traffic)

List of supported NICs:

10GbE – Intel X520 / Intel X710 / Intel E810 / Mellanox ConnectX-6

25GbE – Intel X710 / Intel E810 / Mellanox ConnectX-5 / Mellanox ConnectX-6

40GbE – Intel XL710 / Mellanox ConnectX-6

50GbE – Intel E810 / Mellanox ConnectX-6

100GbE – Intel E810 / Mellanox ConnectX-5 / Mellanox ConnectX-6

200GbE – Mellanox ConnectX-6

NAT also supports virtual network adapters e1000, e1000e, virtio, and vmxnet3. However, their usage is not recommended in commercial environments due to their performance limitations.

Processor Requirements¶

The processor must support the AVX2 instruction set to run the Virtual Machine. All Intel Xeon processors starting from the Haswell (v3) family support this instruction set.

To check the processor model, use the lscpu utility:

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 56

On-line CPU(s) list: 0-55

Thread(s) per core: 2

Core(s) per socket: 14

Socket(s): 2

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2680 v4 @ 2.40GHz

Stepping: 1

CPU MHz: 2899.951

CPU max MHz: 3300.0000

CPU min MHz: 1200.0000

BogoMIPS: 4788.95

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 35840K

NUMA node0 CPU(s): 0-55

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch epb cat_l3 cdp_l3 invpcid_single intel_ppin intel_pt tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdt_a rdseed adx smap xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts

The avx2 flag must be present in the Flags line. To easily check the presence of the flag, the following command can be used:

$ lscpu | grep avx2

If the command prints nothing, the processor does not support the AVX2 instruction set and cannot run the Virtual Machine.
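The same check can be scripted so that it yields an explicit result instead of empty output. The sketch below is only an illustration: the helper names has_avx2 and cpu_flags are hypothetical, and it assumes a Linux host where /proc/cpuinfo exposes a "flags" line.

```shell
# has_avx2 FLAGS: succeeds if the space-separated flag list contains "avx2".
# (has_avx2 and cpu_flags are illustrative names, not part of any tool.)
has_avx2() {
    case " $1 " in
        *" avx2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# cpu_flags: print the flag list of the first CPU (assumes Linux /proc/cpuinfo).
cpu_flags() {
    grep -m1 '^flags' /proc/cpuinfo 2>/dev/null | cut -d: -f2
}

if has_avx2 "$(cpu_flags)"; then
    echo "AVX2 supported"
else
    echo "AVX2 NOT supported: the Virtual Machine cannot run on this CPU"
fi
```

Matching the flag as a whole word avoids false positives from longer flag names that merely contain the same characters.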

Memory Requirements¶

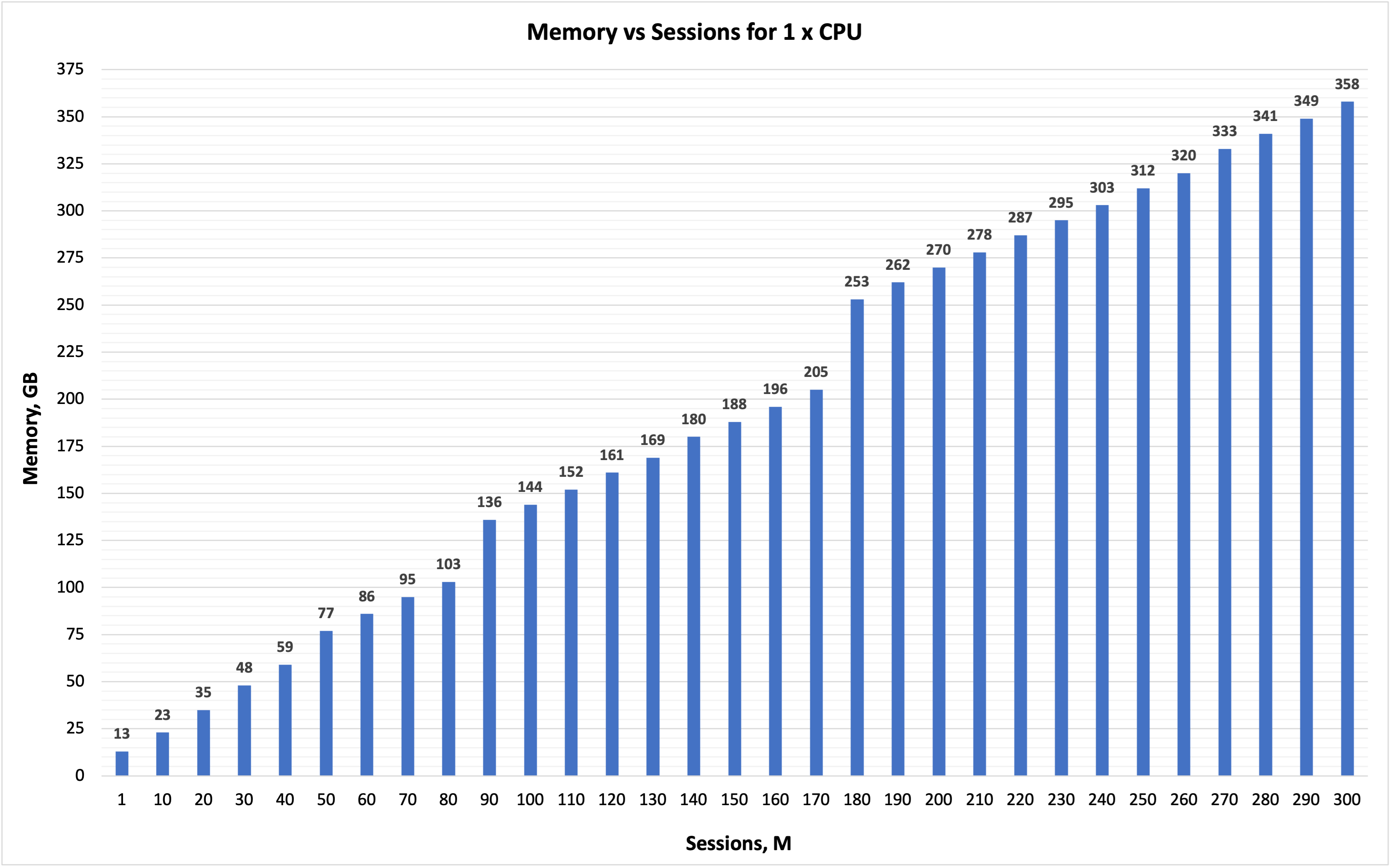

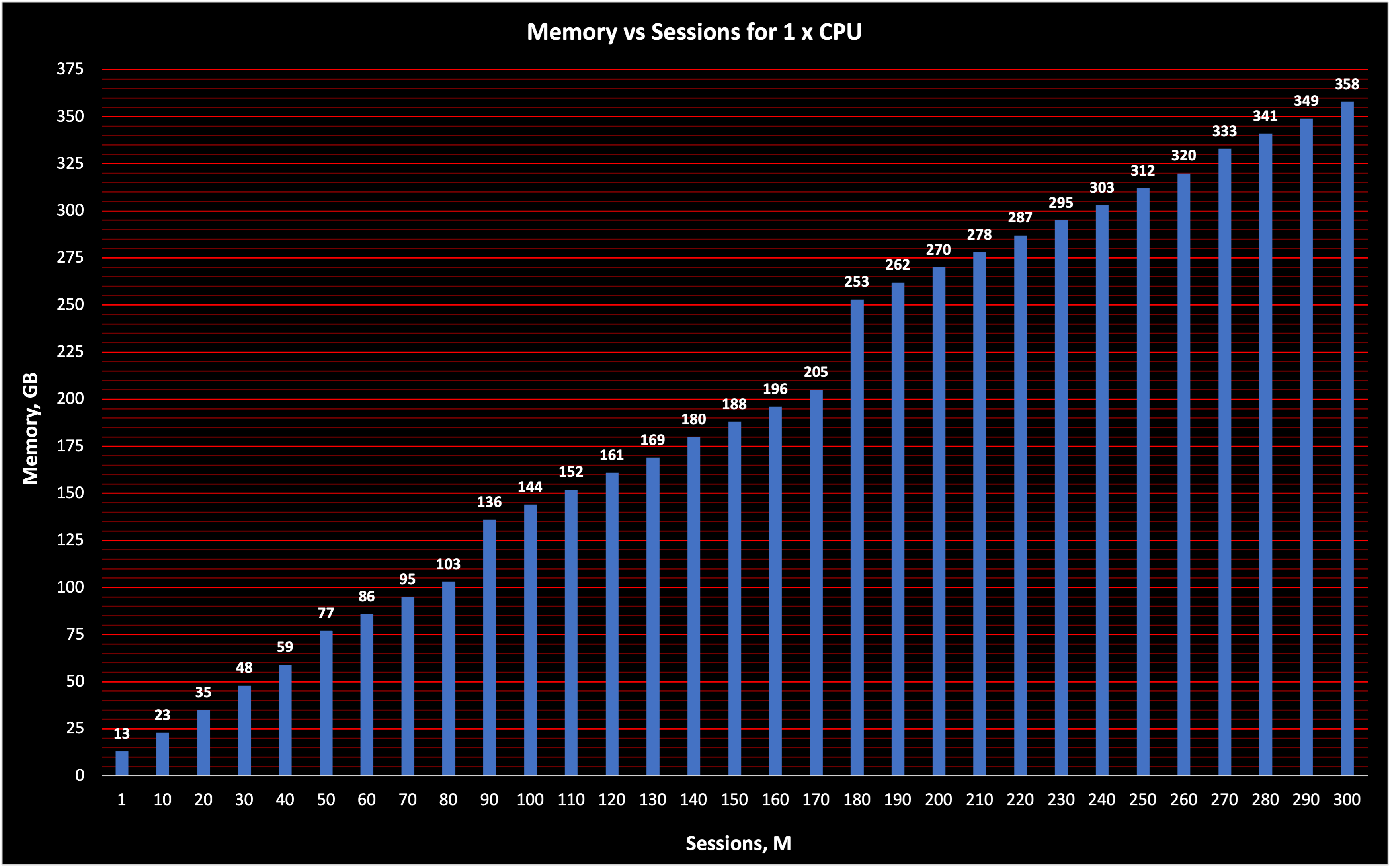

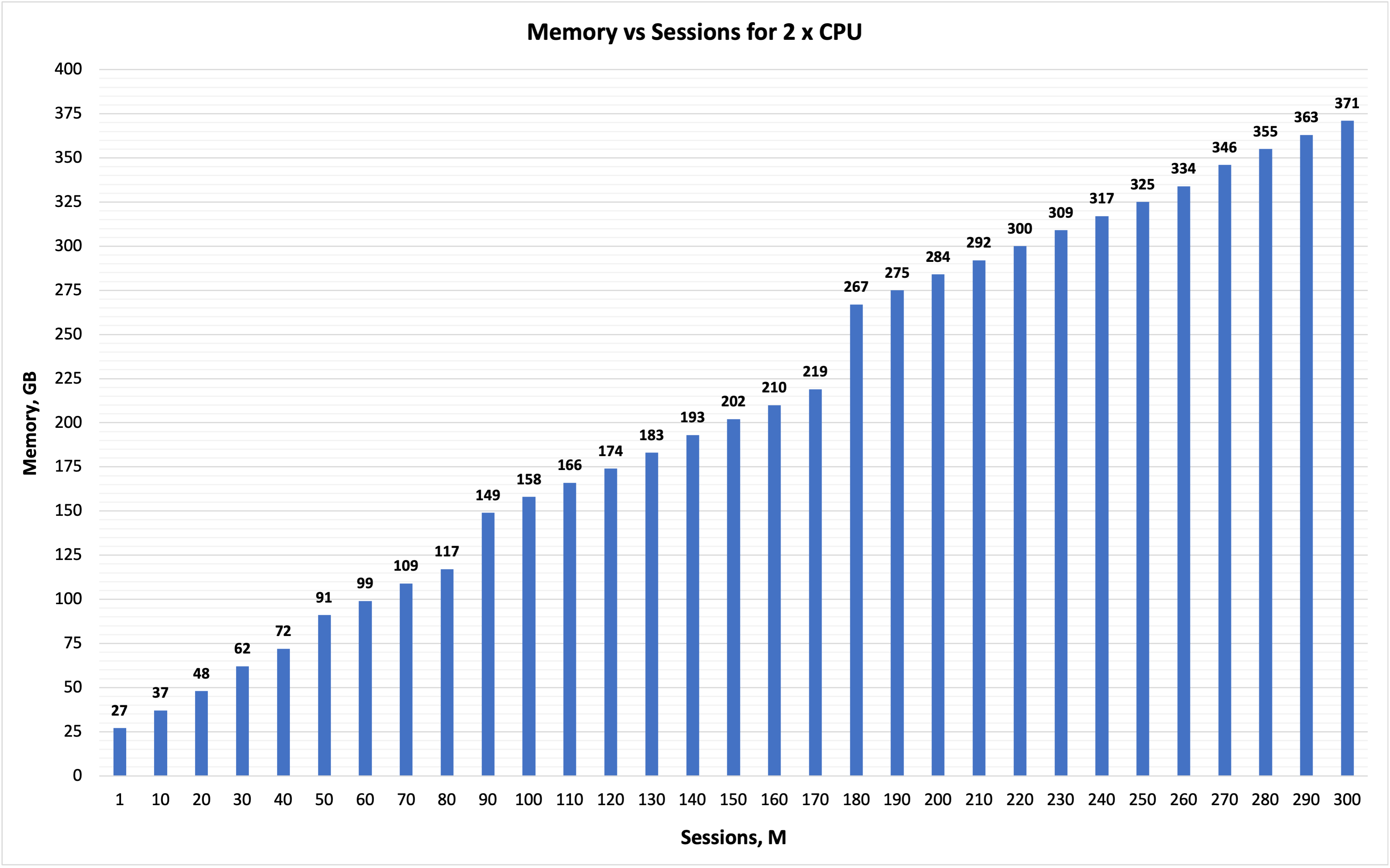

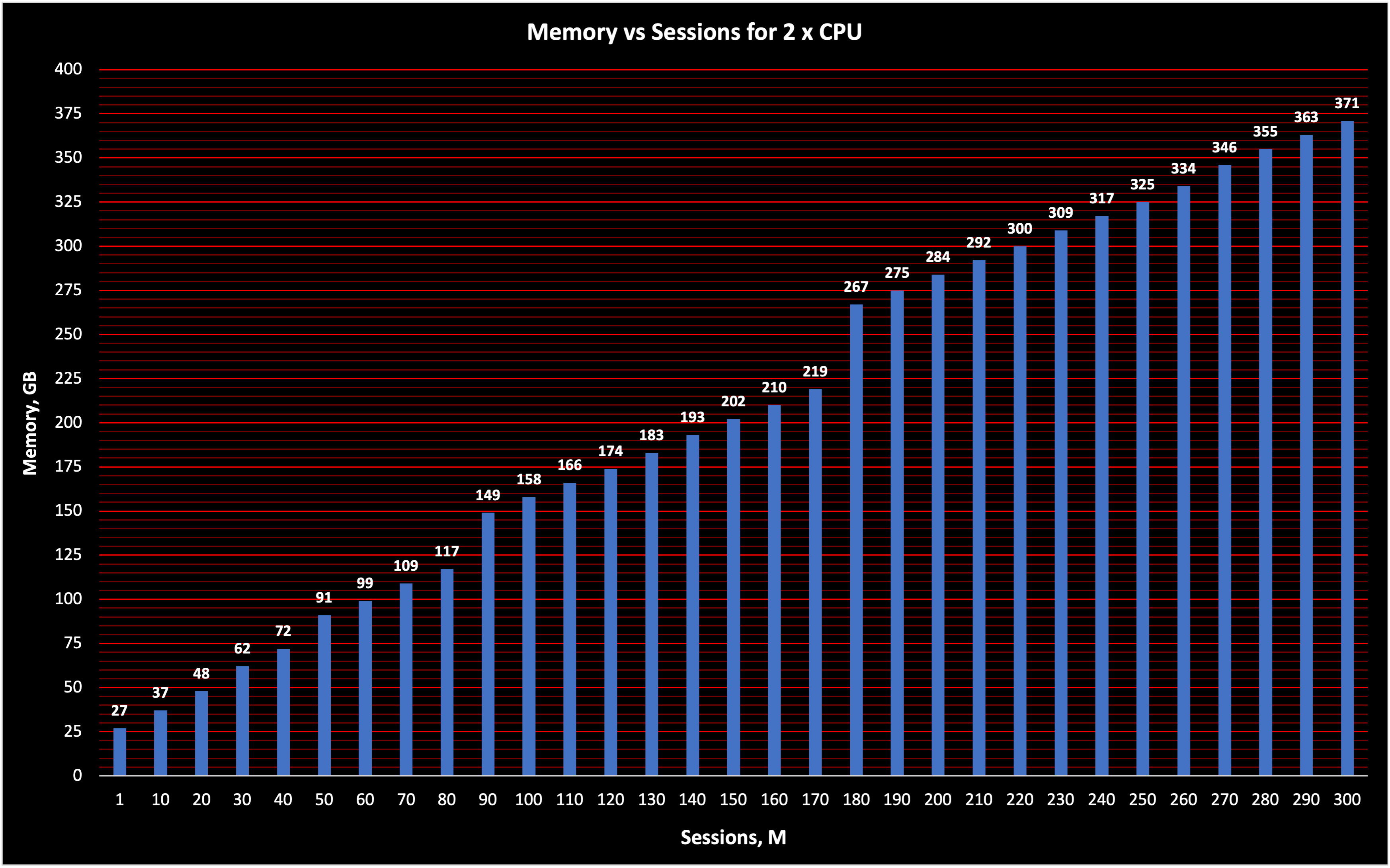

The figures show the memory requirements versus the number of sessions for one and two CPUs. This memory recommendation applies to the VM and assumes that memory for the host OS has already been reserved.

Figure: Memory(GB) vs Session(M) for 1xCPU¶

Figure: Memory(GB) vs Session(M) for 2xCPU¶

Installation Steps¶

Before following the steps described in this chapter, make sure that the Linux minimal package is installed as the Host OS. Make sure that Turbo Boost mode has been disabled in the BIOS.

Network Configuration¶

Configure the Host OS network

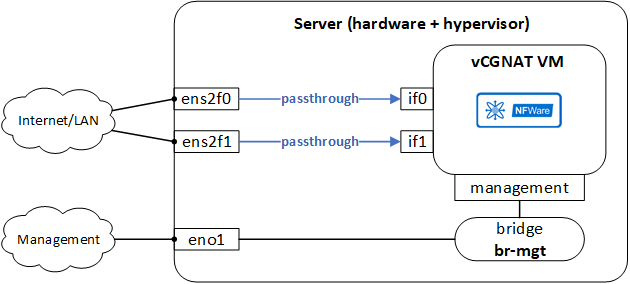

The NFWare VM has two interface types.

The first type is the management interface used for OAM. It does not require high throughput and can work via a Host OS bridge. The second type is Data Plane interfaces. Data Plane interfaces are passed through PCI directly from a server to the NAT virtual machine.

Figure 1. Network configuration¶

Before the NFWare VM starts, create the bridge and add the management interface to it. The bridge creation examples below use the following names:

br-mgt – the name of a bridge device

enp3s0f0 – the name of the NIC used to connect to a management network

In CentOS/Oracle Linux, the Network Manager utility can be used:

nmcli connection add type bridge ifname br-mgt bridge.stp no

nmcli con add type ethernet con-name br-mgt-slave-1 ifname enp3s0f0 master br-mgt

nmcli con modify bridge-br-mgt ipv4.method auto

nmcli con up bridge-br-mgt

nmcli con up br-mgt-slave-1

In Ubuntu, the Netplan utility can be used.

Open and edit the network configuration file (*.yaml) under /etc/netplan/:

network:
  ethernets:
    enp3s0f0:
      dhcp4: false
  version: 2
  bridges:
    br-mgt:
      dhcp4: true
      interfaces:
        - enp3s0f0

To apply the settings, use this command:

netplan apply

Preparation¶

Install the QEMU/KVM hypervisor and its dependencies, and copy the vCGNAT VM installation script.

CentOS 7¶

Install libvirt with dependencies:

yum install libvirt

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor from a repository. The KVM hypervisor version must be 4.2 or more recent.

yum install centos-release-qemu-ev

yum install qemu-kvm-ev

Install the following packages and dependencies from a repository:

xorriso

libosinfo

xz-devel

python3

zlib-devel

wget

pciutils

msr-tools

yum install xorriso libosinfo xz-devel python3 zlib-devel pciutils msr-tools

Install the required packages and dependencies from a local directory:

virt-install

python

cd /var/lib/libvirt/images/hw-configuration/virt-install/CentOS-7

yum localinstall *

After installing the KVM hypervisor and packages, reboot the machine:

reboot

CentOS 8¶

Install libvirt with dependencies:

yum install libvirt

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor from a repository. The KVM hypervisor version must be 4.2 or more recent.

yum install qemu-kvm

Install the following packages and dependencies from a repository:

msr-tools

yum install oracle-epel-release-el8.x86_64

yum install msr-tools

Install the required packages and dependencies from a local directory:

virt-install

cd /var/lib/libvirt/images/hw-configuration/virt-install/CentOS-8

yum localinstall *

After installing the KVM hypervisor and packages, reboot the machine:

reboot

Oracle Linux 7¶

Install libvirt with dependencies:

yum install libvirt

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor, required packages, and dependencies from a repository. The KVM hypervisor version must be 4.2 or more recent.

yum install qemu-kvm

yum install libpmem

yum --disablerepo="*" --enablerepo="ol7_kvm_utils" update

Install the following packages and dependencies from a repository:

msr-tools

yum install msr-tools

Install the required packages and dependencies from a local directory:

virt-install

python

cd /var/lib/libvirt/images/hw-configuration/virt-install/Oracle-Linux-8

yum localinstall *

After installing the KVM hypervisor and packages, reboot the machine:

reboot

Oracle Linux 8¶

Install Python3 module:

dnf module install python36

Explicitly specify the version by choosing /usr/bin/python3:

alternatives --config python3

Install libvirt with dependencies:

yum install libvirt

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor from a repository. The KVM hypervisor version must be 4.2 or more recent.

yum install qemu-kvm

Install the following packages and dependencies from a repository:

msr-tools

yum install oracle-epel-release-el8.x86_64

yum install msr-tools

Install the required packages and dependencies from a local directory:

virt-install

cd /var/lib/libvirt/images/hw-configuration/virt-install/Oracle-Linux-8

yum localinstall *

After installing the KVM hypervisor and packages, reboot the machine:

reboot

Ubuntu 20.04¶

Install libvirt with dependencies:

sudo apt install libvirt-daemon-system

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor from a repository. The KVM hypervisor version must be 4.2 or more recent.

sudo apt install qemu-kvm

Install the following packages and dependencies from a repository:

msr-tools

sudo apt install msr-tools

Install the required packages and dependencies from a local directory:

virt-install

cd /var/lib/libvirt/images/hw-configuration/virt-install/Ubuntu-20.04

dpkg -i *

If dpkg reports missing dependencies or broken packages, run apt with the --fix-broken install option, and then repeat the installation:

apt --fix-broken install

dpkg -i *

After installing the KVM hypervisor and packages, reboot the machine:

reboot

Ubuntu 22.04¶

Install libvirt with dependencies:

sudo apt install libvirt-daemon-system

Download the vCGNAT VM image to the following directories:

2.1. vCGNAT VM qcow2 image to the /var/lib/libvirt/images/ directory

2.2. vCGNAT VM installation script to the /var/lib/libvirt/images/hw-configuration/ directory

Install KVM hypervisor from a repository. The KVM hypervisor version must be 4.2 or more recent.

sudo apt install qemu-kvm

Install the following packages and dependencies from a repository:

sudo apt install msr-tools gir1.2-appindicator3-0.1 genisoimage

Install the required packages and dependencies from a local directory:

virt-install

cd /var/lib/libvirt/images/hw-configuration/virt-install/Ubuntu-20.04

dpkg -i *

If dpkg reports missing dependencies or broken packages, run apt with the --fix-broken install option, and then repeat the installation:

apt --fix-broken install

dpkg -i *

After installing the KVM hypervisor and packages, reboot the machine:

reboot
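Every distribution above requires hypervisor version 4.2 or more recent. The following sketch shows one way to verify that after installation. It assumes that the relevant version is the one reported by the QEMU binary, that GNU sort with the -V option is available, and that the binary lives at the path held in QEMU_BIN (a hypothetical variable; the actual path differs between distributions, for example /usr/libexec/qemu-kvm or /usr/bin/qemu-system-x86_64). The helper name version_at_least is also illustrative.

```shell
# version_at_least ACTUAL MINIMUM: succeeds if ACTUAL >= MINIMUM.
# Uses the version sort (-V) of GNU sort: the smaller version sorts first.
version_at_least() {
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Assumed location of the QEMU binary; override QEMU_BIN if it differs.
QEMU_BIN="${QEMU_BIN:-/usr/libexec/qemu-kvm}"

if [ -x "$QEMU_BIN" ]; then
    # "--version" prints a line such as "QEMU emulator version 4.2.0 ..."
    qemu_version=$("$QEMU_BIN" --version \
        | sed -n 's/.*version \([0-9][0-9.]*\).*/\1/p' | head -n 1)
    if version_at_least "$qemu_version" 4.2; then
        echo "QEMU $qemu_version is recent enough"
    else
        echo "QEMU $qemu_version is older than 4.2"
    fi
fi
```

If the binary is not found at the assumed path, the script prints nothing; set QEMU_BIN to the correct location and rerun it.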

Running Configuration Script¶

The configuration script helps allocate server resources to the vCGNAT virtual machine and turns on the autostart for vCGNAT VM.

Run the configuration script in the GUI mode:

cd /var/lib/libvirt/images/hw-configuration/

./configure

Note

Use character encoding UTF-8 in the console terminal if the GUI is displayed incorrectly.

Enter the path to a vCGNAT qcow2 image file.

For example: /var/lib/libvirt/images/

Select the CPU configuration mode: auto or manual

The auto mode is used for simple installations, for example, if there is just one vCGNAT virtual machine on an entire server. It is preferred in most cases.

Manual mode is used for complex installations, where several VMs share server resources.

Select the number of NUMA nodes (CPUs) used for the vCGNAT VM.

Select the total number of cores used for the vCGNAT VM.

Minimum – two for single-CPU, or four for dual-CPU configurations.

It is recommended to leave at least one core for the host OS in single-CPU configurations or two cores in dual-CPU configurations.

For example:

Single-CPU and 20 total cores – 19 cores are allocated to vCGNAT VM

Dual-CPU and 72 total cores – 70 cores are allocated to vCGNAT VM

Enter the vCGNAT VM name. The default name is nfware-vcgnat.

Enter memory settings for NUMA0 (CPU1).

Depending on the server's total RAM, it is recommended to reserve the following amount of memory for the host OS:

Total RAM < 50GB – 2 GB per NUMA

Total RAM from 50GB to 100GB – 4 GB per NUMA

Total RAM from 100GB to 256GB – 8 GB per NUMA

Total RAM > 256GB – 16 GB per NUMA

For example: If total RAM is 187 GB, then 8 GB is to be used for the host OS, and 179 GB is to be allocated to vCGNAT VM.

Enter the memory settings for NUMA1 (CPU2).

It is recommended to use the NUMA0 configuration.

Select the NICs to use for the Data Plane.

Enter the management bridge name created in Network Configuration.

For example: br-mgt

The host configuration has been completed. Reboot the machine.
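The core and memory recommendations from the steps above can be worked out ahead of time. The sketch below is only an illustration of that arithmetic: the function names are hypothetical and not part of the configuration script. It assumes one host core is left per CPU socket, and it resolves the overlapping range boundaries by treating exactly 50 GB as the 4 GB tier, exactly 100 GB as the 8 GB tier, and exactly 256 GB as the 8 GB tier.

```shell
# Illustrative helpers; none of these names belong to the vCGNAT tooling.

# vm_cores TOTAL_CORES SOCKETS: cores for the VM, leaving one core per
# CPU socket for the host OS.
vm_cores() (
    vm=$(( $1 - $2 ))
    min=$(( 2 * $2 ))   # minimum: 2 cores single-CPU, 4 cores dual-CPU
    if [ "$vm" -lt "$min" ]; then
        echo "error: $vm cores left for the VM, need at least $min" >&2
        exit 1
    fi
    echo "$vm"
)

# host_reserve_gb TOTAL_GB: recommended host OS reservation per NUMA node.
# Boundary handling (50, 100, 256) is an assumption, see the text above.
host_reserve_gb() (
    if   [ "$1" -lt 50 ];  then echo 2
    elif [ "$1" -lt 100 ]; then echo 4
    elif [ "$1" -le 256 ]; then echo 8
    else echo 16
    fi
)

# vm_memory_gb TOTAL_GB NUMA_NODES: memory left for the VM after reserving
# the per-NUMA amount on each counted node.
vm_memory_gb() (
    echo $(( $1 - $(host_reserve_gb "$1") * $2 ))
)

vm_cores 20 1        # single-CPU server with 20 cores: prints 19
vm_cores 72 2        # dual-CPU server with 72 cores: prints 70
vm_memory_gb 187 1   # 187 GB total, one NUMA node counted: prints 179
```

The last call reproduces the 187 GB example, which subtracts a single 8 GB reservation.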

Platform Configuration¶

The platform configuration is the main part of vCGNAT that impacts the solution performance. To change a platform parameter, use the following command:

nfware# platform set <>

To apply the platform settings, reboot the VM:

nfware# reboot

The platform settings configuration process consists of three main sections:

Core settings

Subscriber settings

Other settings

Core Settings¶

Core settings define the number of cores for each task type and are applied per CPU. For example, if 19 cores were allocated to the vCGNAT VM in a single-CPU configuration in the previous step, all 19 cores are available for the platform settings. In a dual-CPU configuration with 70 cores allocated, divide the number of cores by two: 35 cores are available for the platform settings.

The vCGNAT has four types of cores. The total number of cores must be divided into these types:

- nb_cmd

nb_cmd cores are used for Control Plane (VM OS, CLI, routing protocols, BFD, SNMP, LACP, SSH).

- nb_rxtx

nb_rxtx cores are used for Data Plane (interface interaction, packet delivery to work cores, balancing).

- nb_work

nb_work cores are used to provide NAT functionality.

- nb_log

nb_log cores are used for sending NAT translation logs to a logging server.

Core Ratio Best Practice

nb_cmd: one core in most cases, two cores for large installations.

nb_work: 2-4 times more than nb_rxtx. Preferred ratio is 2.

nb_log uses the same cores as nb_work.

Example

Suppose a total of 70 cores is allocated to the vCGNAT VM in a dual-CPU configuration: 70 / 2 = 35 cores per CPU.

Allocate 1 core to nb_cmd, which leaves 34 cores for the other task types.

nb_work should be 2–4 times more than nb_rxtx. Let’s take 3 as an example.

nb_rxtx = 34/3 ≈ 11.33

Round down to the nearest even number: 10.

nb_work = 34 – 10 = 24 (an effective ratio of 2.4, which is within the recommended 2–4 range)

nb_log = nb_work = 24

Summary

VM cores = 70

per-socket cores = 35

nb_cmd = 1

nb_rxtx = 10

nb_work = 24

nb_log = 24

To configure these settings in the NAT CLI:

nfware# platform set nb_cmd 1

nfware# platform set nb_rxtx 10

nfware# platform set nb_work 24

nfware# platform set nb_log 24
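The calculation in this example can be expressed as a small helper that prints the resulting platform set commands. This is a sketch of the procedure as described above, not a vendor tool: core_plan and its argument order are hypothetical, and the divisor is the value chosen in the example (3).

```shell
# core_plan VM_CORES SOCKETS NB_CMD DIVISOR
# Follows the worked example: split cores per socket, subtract nb_cmd,
# divide the rest by DIVISOR, round nb_rxtx down to an even number, and
# give the remaining cores to nb_work (nb_log reuses the nb_work count).
core_plan() (
    per_socket=$(( $1 / $2 ))
    rest=$(( per_socket - $3 ))
    rxtx=$(( rest / $4 ))          # integer division rounds down
    rxtx=$(( rxtx - rxtx % 2 ))    # round down to an even number
    work=$(( rest - rxtx ))
    echo "platform set nb_cmd $3"
    echo "platform set nb_rxtx $rxtx"
    echo "platform set nb_work $work"
    echo "platform set nb_log $work"
)

core_plan 70 2 1 3
```

For the inputs above, the helper prints the same four commands as the example: nb_cmd 1, nb_rxtx 10, nb_work 24, and nb_log 24.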

Core Settings for Mellanox ConnectX-6¶

For this NIC, the core configuration can be simplified: there is no need to calculate separate core counts for the rxtx, work, and log tasks. All cores except those reserved for nb_cmd are shared between these tasks without performance loss. To do this:

Specify the sched_isolation_mask parameter equal to 1:

nfware# platform set sched_isolation_mask 1

Specify the same number of cores for nb_rxtx, nb_work, and nb_log. For example, if the vCGNAT VM has 92 cores allocated on a dual-CPU server, then platform settings would be as follows:

nfware# platform set nb_cmd 2

nfware# platform set nb_rxtx 44

nfware# platform set nb_work 44

nfware# platform set nb_log 44
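The shared core count in this example follows from the per-CPU split: 92 / 2 = 46 cores per CPU, minus 2 cores for nb_cmd, leaves 44. A minimal sketch of that arithmetic, using a hypothetical helper name shared_cores:

```shell
# shared_cores VM_CORES SOCKETS NB_CMD: cores per socket that remain after
# nb_cmd and are shared by nb_rxtx, nb_work, and nb_log.
shared_cores() (
    echo $(( $1 / $2 - $3 ))
)

n=$(shared_cores 92 2 2)
for task in nb_rxtx nb_work nb_log; do
    echo "platform set $task $n"
done
```

With the values from the example, each of the three commands is printed with the value 44.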

Subscriber Settings¶

Subscriber settings define how many subscribers and sessions NAT can hold. These limits depend on the memory size and license limitations.

nfware# platform set max_subscribers <>

nfware# platform set max_sessions <>

The max_subscribers parameter is mandatory.

The max_sessions parameter is optional. If it is not specified, it is set automatically:

maximum sessions = 200 * maximum subscribers
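As a quick illustration of this default, the helper below (a hypothetical name, shown only to make the arithmetic concrete) computes the session limit that would apply when only the subscriber limit is set:

```shell
# default_max_sessions MAX_SUBSCRIBERS: the automatic session limit,
# 200 sessions per subscriber.
default_max_sessions() (
    echo $(( 200 * $1 ))
)

default_max_sessions 1000000   # 1 million subscribers: prints 200000000
```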

Other Settings¶

Other settings include several parameters used to tune vCGNAT for the best performance. The most commonly used are:

The number of CPU memory channels

Check this value in the CPU specification. The default value is 4.

nfware# platform set nb_mem_channels <>

The default NIC RX queue size (in packets)

The recommended values are:

1024 for Intel 1/10/40G NICs,

2048 for Mellanox 100G NICs.

The default value is 1024.

nfware# platform set nb_rx_desc_default <>

The default NIC TX queue size (in packets)

The recommended values are:

1024 for Intel 1/10/40G,

2048 for Mellanox 100G.

By default, it is 1024.

nfware# platform set nb_tx_desc_default <>